Our Clinical Research 101 series takes an in-depth look at key steps and tips for navigating the clinical research process. The twelfth instalment in the series is by Glenyth Caragata, Evaluation Specialist at CHÉOS. The following is a brief overview of evaluation studies and how they differ from clinical research studies.

Dr. Caragata is an Evaluation Specialist working with Dr. Amy Salmon, Program Head of Knowledge Translation at CHÉOS. The Centre’s research support staff are experts in the regulatory, policy, budgeting, and implementation requirements for clinical research studies. If you would like to inquire about our services, please submit your request here.

eval·u·a·tion:

the process of judging something’s quality, importance, or value

(Cambridge Dictionary)

What is it?

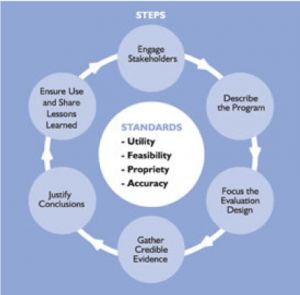

Evaluation studies generally have a broader scope than traditional clinical trials* and typically address several questions at once. Like clinical trials, evaluation studies may count improved patient outcomes among their goals. However, they also examine the intervention's effects on other patient characteristics or circumstances, the design of programs that use the intervention, how the intervention is being implemented, and the overall cost and efficiency of the approach.

Evaluation studies often define several short-term and intermediate-term goals for a number of domains (e.g., patient, family, community) as well as a number of long-term goals that support the overall strategy of the organization. The ultimate goal of an evaluation is to improve the approach to solving a problem or set of problems.

* A clinical trial is a research study in which one or more human subjects are prospectively assigned to one or more interventions (which may include placebo or other control) to evaluate the effects of those interventions on health-related biomedical or behavioural outcomes. Note that clinical trials are changing: patient input is increasingly included, and more “real world”** clinical trials are being conducted.

** For information on traditional clinical trials versus real world clinical trials, see the BC SUPPORT Unit.

Why is it important and when do I need this?

There are five main types of classical evaluation studies, explained below. Each is designed for a different purpose and addresses different questions, so a thorough evaluation uses a combination of them. Clinical trials alone generally cannot answer these questions. However, clinical trial results may contribute part of the knowledge that feeds into some evaluation studies.

What should I do to help my patients?

Formative evaluation is used before a program is implemented or during its design phase. It addresses questions such as what type of program your particular patient group needs. For example, you may have adopted a “proven” successful program from the literature. A formative evaluation will examine whether it is likely to work for your patients. Formative evaluations generate baseline data and identify where your program plans could be improved. They can also give insight into what the program’s priorities should be. Formative evaluations of clinical trials can also be used to identify inherent biases in study plans.

Is my intervention functioning properly?

Process evaluation compares how your program is actually being implemented with how you planned it. The data it generates can identify inefficiencies and inconsistencies in delivery, show how well procedures are working, and point to areas for improvement. For example, when a quality improvement program is implemented, a process evaluation can verify that procedures are being followed. Have the people administering the program adopted an ‘easier’ way to do a task than the one outlined in the procedural documents? Have new biases been introduced? How will these factors affect results?

Did the intervention work?

Outcome evaluation focuses on changes in patients that have occurred over time. This sounds very close to the purpose of clinical trials, but there is one important distinction. In clinical trials, investigators attempt to control for external factors that could affect outcomes. This way, any changes in participants’ health should be attributable to the treatment being tested. The data collected and analyzed focus primarily on disease status. Outcome evaluation studies also measure outcomes, but they do not assume the changes arise from the intervention. Instead, they attempt to determine to what degree those outcomes are attributable to the intervention itself versus other factors. To do this, outcome evaluations tend to collect a broader set of data on what other changes have occurred in the study subjects. They also focus more on how the intervention performs under real-world conditions across a diverse sample of people, rather than in the carefully selected, controlled groups of a clinical trial.

Could others benefit from this intervention?

Summative evaluations are conducted after a period of time to help decide whether to continue, expand, or end a program. They address questions such as, “Can this intervention be applied to other populations in other contexts?” For example, a summative evaluation would identify factors in a clinical trial that could limit the intervention’s applicability to other situations.

Has it really made a difference?

And finally, impact evaluations focus on long-term, sustained changes as a result of the program, including both intended and unintended consequences. For example, did introduction of a new drug improve the quality of life for the target population, or did it produce other problems not foreseen during the clinical trials? Did other uses for the drug arise? Was the drug cost-effective and sustainable over the long term?

Clinical Research Study vs Evaluation Study

Clinical research studies and evaluation studies share some characteristics, and in fact, clinical research studies can form a part of evaluation studies.

Clinical trials traditionally use an experimental randomized controlled trial (RCT) design. It starts from the null hypothesis, “There is no difference between the treatment group and the control group,” and seeks evidence strong enough to reject it. Study participants are recruited, screened for specific characteristics, and randomized to a treatment or a control group. The aim is to construct treatment and control groups that are as similar as possible. One of the questions often addressed by clinical trials is: “Has the intervention resulted in a different level of change in a specific characteristic (the disease) between the group receiving the treatment and the group not receiving the treatment?”
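
For readers who like to see the comparison spelled out, here is a minimal Python sketch of the treatment-versus-control question. It assumes each participant has one baseline and one follow-up measurement of the characteristic of interest. The function names and the example values are hypothetical and for illustration only. The sketch randomly splits participants into two equal-sized groups and computes the difference in average change between them. It does not perform the statistical test a real trial would use to decide whether that difference is large enough to reject the “no difference” hypothesis.

```python
import random
from statistics import mean


def randomize(participants, seed=None):
    """Shuffle participants and split them into treatment and control groups.

    With an odd number of participants, the control group gets the extra person.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_change(group, baseline, follow_up):
    """Average change (follow-up minus baseline) in the measured characteristic."""
    return mean(follow_up[p] - baseline[p] for p in group)


def difference_in_change(treatment, control, baseline, follow_up):
    """Mean change in the treatment group minus mean change in the control group."""
    return mean_change(treatment, baseline, follow_up) - mean_change(control, baseline, follow_up)


# Hypothetical measurements (e.g., a symptom score) for eight participants.
baseline = {"p1": 10, "p2": 12, "p3": 11, "p4": 9, "p5": 14, "p6": 13, "p7": 10, "p8": 12}
follow_up = {"p1": 7, "p2": 9, "p3": 10, "p4": 8, "p5": 10, "p6": 12, "p7": 9, "p8": 11}

treatment, control = randomize(baseline.keys(), seed=42)
print("treatment:", treatment, "control:", control)
print("difference in mean change:", difference_in_change(treatment, control, baseline, follow_up))
```

Seeding the generator makes the allocation reproducible. Real trials typically rely on dedicated allocation procedures, such as block or stratified randomization, rather than a simple shuffle like this one.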

Evaluation studies use a variety of methods, including qualitative and quantitative, experimental and quasi-experimental approaches. In evaluation studies, a logic model is often developed to outline how an intervention is designed to address the organization’s goals. It sets out the resources used (e.g., people, money, technology), the activities undertaken (e.g., distributing information, holding training sessions), and the outputs generated (e.g., number of pills distributed). Laying these out makes it possible to check the logical flow between the components. One of the questions often addressed by evaluations is known as the counterfactual: “If not for this intervention, what would likely have happened?”
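
A logic model is usually drawn as a diagram, but the chain it describes can also be sketched as a small data structure. The Python below (3.9 or later) is a hypothetical illustration, not a standard evaluation tool. The class names, the `gaps` check, and the example program are all assumptions. The sketch records the three components named above (resources, activities, outputs) and flags breaks in the chain. Examples include an activity that relies on a resource that was never planned for, or a planned output that no activity produces. Full logic models usually go further and link outputs to short-term, intermediate, and long-term outcomes. That step is left out here.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    uses: list[str]      # resources the activity draws on (e.g., staff, money)
    produces: list[str]  # outputs the activity generates (e.g., sessions held)


@dataclass
class LogicModel:
    resources: list[str]
    activities: list[Activity]
    outputs: list[str]

    def gaps(self) -> list[str]:
        """Return human-readable breaks in the resources -> activities -> outputs chain."""
        problems = []
        produced = set()
        for act in self.activities:
            # Every resource an activity relies on should be declared up front.
            for r in act.uses:
                if r not in self.resources:
                    problems.append(f"{act.name}: uses undeclared resource '{r}'")
            # Every output an activity generates should appear in the planned outputs.
            for o in act.produces:
                if o not in self.outputs:
                    problems.append(f"{act.name}: produces unplanned output '{o}'")
            produced.update(act.produces)
        # Every planned output should be generated by at least one activity.
        for o in self.outputs:
            if o not in produced:
                problems.append(f"output '{o}' is not produced by any activity")
        return problems


# Hypothetical example program.
model = LogicModel(
    resources=["staff", "budget"],
    activities=[
        Activity("hold training sessions", uses=["staff", "budget"], produces=["sessions held"]),
        Activity("distribute information", uses=["staff", "printing"], produces=["pamphlets distributed"]),
    ],
    outputs=["sessions held", "pamphlets distributed", "clinics reached"],
)

for problem in model.gaps():
    print(problem)
```

In this made-up example, the check reports that printing was never listed as a resource and that no activity is planned to reach clinics. These are the kinds of gaps a logic model is meant to surface before a program is evaluated.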

Evaluation is a broad discipline that can be applied to a wide variety of situations, from very small projects to huge global initiatives. It requires an “expansive mindset” or way of thinking that takes into consideration the big picture as well as the details. In addition to those described above, there are several other approaches to evaluation designed to meet the unique needs of different organizations and situations.

Links to references and resources:

CDC: Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide

WHO: Evaluation Practice Handbook

Canadian Evaluation Society: What is Evaluation?

Treasury Board of Canada: Program Evaluation Methods

Gov. of Ontario: The Health Planner’s Toolkit: Evaluation (Module 6)